AI breakthrough? Researchers give robotic hand 100 years of virtual training

- Ken Ecott

- Oct 19, 2018

- 3 min read

How long does it take a robotic hand to learn to juggle a cube? About 100 years, give or take.

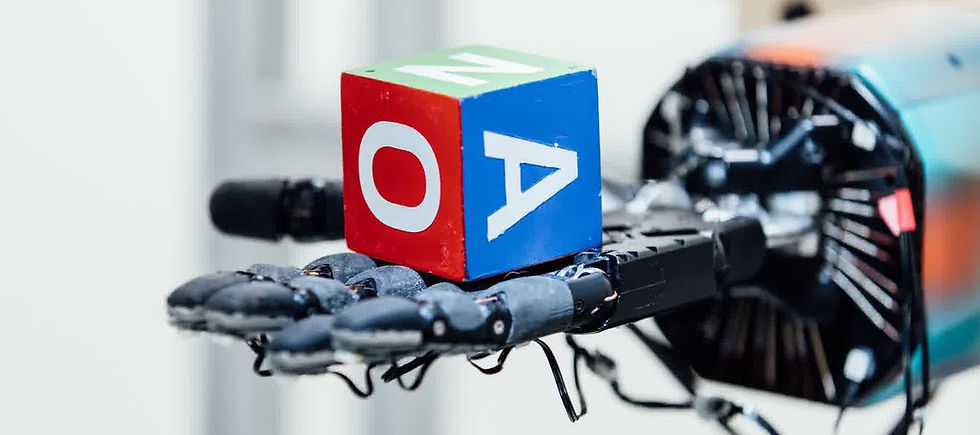

AI researchers from non-profit organisation OpenAI have created a system that allows a Robot hand to learn real world moves in virtual training environment. The robotic hand has learned to manipulate physical objects with unprecedented dexterity, with no human input.

OpenAI’s researchers, who are backed by Tesla Inc founder Elon Musk and Silicon Valley financier Sam Altman have made significant advances in the field of training robots in simulated environments in order to solve real-world problems more swiftly and efficiently than was possible before.

The team paid Google $3,500 to run its software on thousands of computers simultaneously, using 6,144 CPU cores and eight GPUs to train the robot hand, OpenAI was able to amass the equivalent of one hundred years of real-world testing experience in just 48 hours.

Their robotic hand system, known as Dactyl (From the Greek daktylos, meaning finger), uses the humanoid Shadow Dexterous Hand from the Shadow Robot Company. The hand successfully taught itself to rotate a cube 50 times in succession, thanks to a reinforcement learning algorithm.

The algorithm uses a machine-learning technique known as reinforcement learning. Dactyl was given the task of manoeuvring a cube so that a different face was upturned. It was left to figure out, through trial and error, which movements would produce the desired results.

This required Dactyl to learn various manipulation behaviours for itself, including finger pivoting, sliding, and finger gaiting.

An OpenAI blog post explains how the research team placed a cube in the palm of the robot hand and asked it to reorient the object. Dactyl did so using just the inputs from three RGB cameras and the coordinates of infrared dots on its fingertips, testing its findings at high speed in a virtual environment before carrying them out in the real world.

In training, every simulated movement that brought the cube closer to the goal gave Dactyl a small reward. Dropping the cube caused it to feel a penalty 20 times as big.

The robot software repeats the attempts millions of times in a simulated environment, trying over and over to get the highest reward. OpenAI used roughly the same algorithm it used to beat human players in a video game, "Dota 2."

Reinforcement learning is inspired by the way animals seem to learn through positive feedback. It was first proposed decades ago, but it has only proved practical in recent years thanks to advances involving artificial neural networks. The Alphabet subsidiary DeepMind used reinforcement learning to create AlphaGo, a computer program that taught itself to play the fiendishly complex and subtle board game Go with superhuman skill.

Other robotics researchers have been testing the approach for a while but have been hamstrung by the difficulty of mimicking the real world’s complexity and unpredictability. The OpenAI researchers got around this by introducing random variations in their virtual world, so that the robot could learn to account for nuisances like friction, noise in the robot’s hardware, and moments when the cube is partly hidden from view.

OpenAI's goal is to develop artificial general intelligence, or machines that think and learn like humans, in a way that is safe for people and widely distributed.

Musk has warned that if AI systems are developed only by for-profit companies or powerful governments, they could one day exceed human smarts and be more dangerous than nuclear war with North Korea.

Further reading: OpenAI’s Dota 2 defeat is still a win for artificial intelligence

Comments